- #Gpu shark command line for mac os x

- #Gpu shark command line install

- #Gpu shark command line drivers

- #Gpu shark command line driver

- #Gpu shark command line software

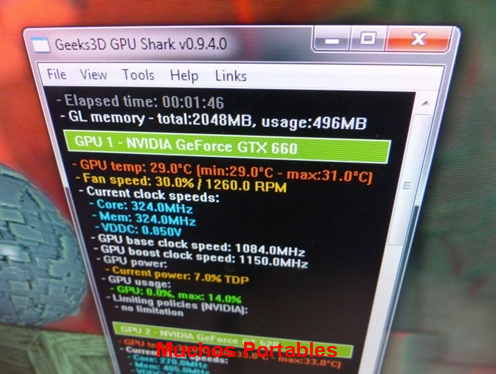

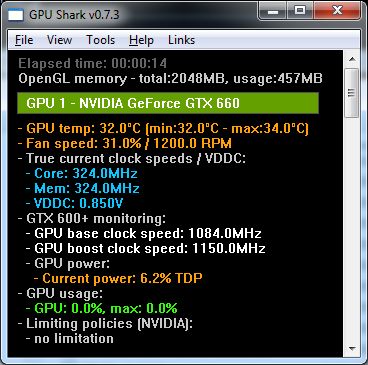

What is GPU Shark? GPU Shark is a simple, lightweight (a few hundred KB) and free GPU monitoring tool, based on ZoomGPU, for NVIDIA GeForce and AMD/ATI Radeon graphics cards. GPU Shark is available for Microsoft Windows only (XP, Vista and 7).

My mission is empty and uses default settings for Syria; I am parked with running engines in front of Beirut.

#Gpu shark command line software

I am on standalone DCS, and OpenXR is my default API inside the Oculus software using the Link cable. Since 2.2, something seems to have changed in the DCS engine. I can reproduce the issue (I manually switched between both updates): with DCS 2.7.2 Open Beta I get 99% GPU load and 38 FPS, while with DCS 2.2 Open Beta I get 92% GPU load and 36 FPS. It gets worse when flying in an active mission. When 5 fps are missing (just because the GPU load drops), you can clearly feel it in VR as the stuttering becomes more and more noticeable. I don't know if this is an engine issue with 2.2 Open Beta or an Oculus SDK issue, but it clearly costs valuable FPS and you can feel it while flying. When you compare the in-game FPS graphs, you can clearly see that the green line has more spikes in 2.2, whereas in 2.7.2 it is nearly flat. I think this is the simulation graph, so maybe the issue is here?

Downloads: GPU Shark 0.25.0.0 (JeGX), GPUShark-0.25.0.0.

#Gpu shark command line drivers

I checked my ASW settings: ASW is off (forced ASW at 45 fps or 30 fps works when enabled). Again: I was running this setup with exactly the same drivers yesterday, and GPU load worked just fine.

#Gpu shark command line install

Install the NVIDIA GRID manager in the hypervisor from the XenServer command line.

#Gpu shark command line driver

Since update 2.2 my GPU load is all over the place, very often only around 70-80% on an empty map in Syria. Also, the FPS gets magically capped at 45 fps and then spikes to 60 fps, but GPU load never reaches 99% as before. This is very obvious in regions with some buildings around. I regularly stress test my setup around Beirut, and GPU load has always been 99% for me here (which is fine). I triple-checked my setup, ran a complete slow repair of DCS (vanilla, no mods) and reinstalled my graphics card driver (NVIDIA 528.24, Win11 64-bit) with DDU.

GPU virtualization with Citrix XenDesktop, using NVIDIA.

Can somebody check whether you also have serious GPU load issues since Open Beta 2.2? I normally play uncapped in VR (no ASW, no fixed FPS) with my Quest Pro. Therefore my GPU load is always around 99%, because I technically cannot reach 90 fps. I did not experience any CPU bottleneck over the last few days, even with heavy online multiplayer on the 4YA Syria server.

Tried to allocate 1024.00 MiB (GPU 0; 8.00 GiB total capacity; 6.13 GiB already allocated; 0 bytes free; 6.73 GiB reserved in total by PyTorch). If reserved memory is > allocated memory, try setting max_split_size_mb to avoid fragmentation.
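The allocator message above compares reserved and allocated memory. As a rough illustration of that comparison, the sketch below parses the quoted message and computes how much memory is reserved but not allocated. The helper name `parse_sizes` and the regular expressions are assumptions made for this example; they are not part of PyTorch.

```python
import re

# Allocator message as quoted above.
MESSAGE = (
    "Tried to allocate 1024.00 MiB (GPU 0; 8.00 GiB total capacity; "
    "6.13 GiB already allocated; 0 bytes free; "
    "6.73 GiB reserved in total by PyTorch)"
)

# Conversion factors from each unit in the message to MiB.
UNIT_TO_MIB = {
    "bytes": 1 / (1024 * 1024),
    "KiB": 1 / 1024,
    "MiB": 1.0,
    "GiB": 1024.0,
}

UNIT = r"(bytes|KiB|MiB|GiB)"


def parse_sizes(message):
    """Hypothetical helper: extract the sizes in the message, in MiB."""
    patterns = {
        "requested": rf"Tried to allocate ([\d.]+) {UNIT}",
        "total": rf"([\d.]+) {UNIT} total capacity",
        "allocated": rf"([\d.]+) {UNIT} already allocated",
        "free": rf"([\d.]+) {UNIT} free",
        "reserved": rf"([\d.]+) {UNIT} reserved",
    }
    sizes = {}
    for key, pattern in patterns.items():
        match = re.search(pattern, message)
        if match:
            sizes[key] = float(match.group(1)) * UNIT_TO_MIB[match.group(2)]
    return sizes


sizes = parse_sizes(MESSAGE)
# Memory held by the caching allocator but not backing any live tensor.
slack_mib = sizes["reserved"] - sizes["allocated"]
print(f"requested: {sizes['requested']:.0f} MiB")
print(f"reserved but unallocated: {slack_mib:.0f} MiB")
```

For the numbers in this message, the reserved-but-unallocated amount is about 0.6 GiB, which is less than the 1024 MiB request, so reducing fragmentation alone may not be enough to make this particular allocation fit.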

I thought my problem was that I was using the big 32-bit weights in the 7GB sd-v1-4-full-ema.ckpt file, so I tried the 16-bit weights in the 4GB sd-v1-4.ckpt instead, which I read somewhere is what you should do if you have memory issues. But when I use that, I get the SAME memory problem.

An Xcode project for iPhone OS that displays the converted shark model.

#Gpu shark command line for mac os x

I just went through this guide on installing this, and when running the first example I got a memory error.

    (base) C:\Users\User>cd C:\stable-diffusion\stable-diffusion-main
    (base) C:\stable-diffusion\stable-diffusion-main>conda activate ldm
    (ldm) C:\stable-diffusion\stable-diffusion-main>python scripts/txt2img.py --prompt "a close-up portrait of a cat by pablo picasso, vivid, abstract art, colorful, vibrant" --plms --n_iter 5 --n_samples 1
    Loading model from models/ldm/stable-diffusion-v1/model.ckpt
      File "scripts/txt2img.py", line 240, in main
        model = load_model_from_config(config, f"")
      File "scripts/txt2img.py", line 50, in load_model_from_config
        pl_sd = torch.load(ckpt, map_location="cpu")
      File "C:\Users\nda\envs\ldm\lib\site-packages\torch\serialization.py", line 712, in load
        return _load(opened_zipfile, map_location, pickle_module, **pickle_load_args)
      File "C:\Users\nda\envs\ldm\lib\site-packages\torch\serialization.py", line 1046, in _load
      File "C:\Users\nda\envs\ldm\lib\site-packages\torch\serialization.py", line 1016, in persistent_load
        load_tensor(dtype, nbytes, key, _maybe_decode_ascii(location))
      File "C:\Users\nda\envs\ldm\lib\site-packages\torch\serialization.py", line 997, in load_tensor
        storage = zip_file.get_storage_from_record(name, numel, torch._UntypedStorage).storage()._untyped()
    DefaultCPUAllocator: not enough memory: you tried to allocate 2359296 bytes.

I had already tried using export on the "Anaconda Prompt (Miniconda3)" console I was told to use to run the Python script:

    'export' is not recognized as an internal or external command,

Anyway, I'm not sure if that's just a bad hack or a workaround which slows things down massively (4x slower?).

The converter is provided as a command line tool for Mac OS X and transforms the.
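`export` is a Unix shell builtin, which is why the Windows Anaconda Prompt rejects it; in that console the equivalent is `set NAME=value`. A shell-independent alternative is to set the variable from Python itself. The sketch below assumes the variable in question is `PYTORCH_CUDA_ALLOC_CONF` with the `max_split_size_mb` option named in the allocator message quoted earlier on this page. The helper name and the value 128 are illustrative assumptions, not recommendations from the original post.

```python
import os


def set_allocator_split_size(max_split_size_mb):
    """Hypothetical helper: configure the CUDA caching allocator via the
    PYTORCH_CUDA_ALLOC_CONF environment variable for this process."""
    if max_split_size_mb <= 0:
        raise ValueError("max_split_size_mb must be a positive integer")
    value = f"max_split_size_mb:{max_split_size_mb}"
    os.environ["PYTORCH_CUDA_ALLOC_CONF"] = value
    return value


# 128 is an illustrative value only (assumption, not from the source).
configured = set_allocator_split_size(128)
print(f"PYTORCH_CUDA_ALLOC_CONF={configured}")

# Import torch only after this point, so the allocator sees the setting
# before any CUDA memory is allocated:
# import torch
```

This setting only concerns GPU memory. The `DefaultCPUAllocator: not enough memory` error in the traceback is raised while loading the checkpoint into system RAM (`map_location="cpu"`), so it is probably unaffected by this variable.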
